GitHub AT-AT v1.0.23: Crafting VM-Based GitOps with Packer — No Redstone Required!

I’m excited to share how GitHub AT-AT v1.0.23 is branching into Virtual Machine–based GitOps. Previously, the GitHub AT-AT was geared toward Azure Functions microservices, but this latest extension takes a fresh approach by integrating Packer and Azure Shared Compute Gallery. I wanted to tackle an operational model that, while perhaps more old school than container-based solutions, still provides a reliable framework for everyday workloads, like spinning up a Minecraft server for my son and me. As you know, I have been rapidly expanding the Azure Functions microservices scenario built into the GitHub AT-AT, which has been a lot of fun! I am an OG traditional application developer, after all, so if I’m not building REST APIs or designing event-driven architectures, I’ll no doubt get a little restless!

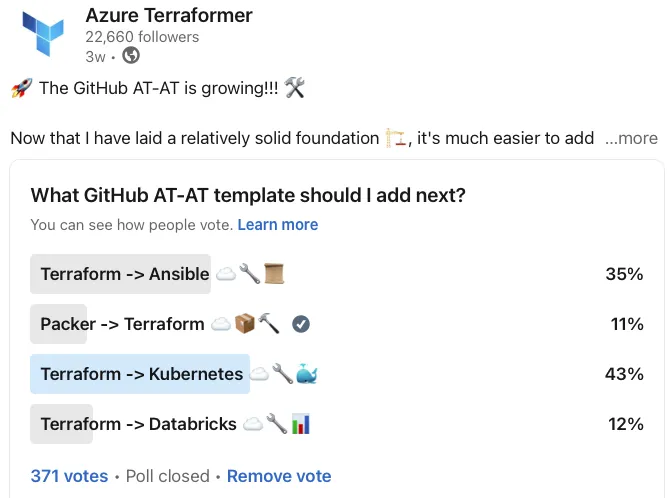

So I took a poll to see which operational paradigm I should take on next within the GitHub AT-AT. After all, the whole idea behind an AT-AT (that is, a Terraform module that automates the automation with Terraform) is to remove toil and friction and help app developers and platform engineers (like me) get set up and operate more efficiently (Day 2 ops, baby).

I threw out a couple of rather complex Terraform operational environments, usually ones that require a two-step process. The community was split between Ansible and Kubernetes, two topics that I have covered on my YouTube channel before.

I’ve covered Ansible directly (see above). Kubernetes, at least from a GitOps standpoint, I’ve only covered in my book, Mastering Terraform, in chapters 8, 10, and 12, where I implement a Kubernetes GitOps operational model on AWS, Azure, and GCP.

The people had spoken! So let it be written! Kubernetes is next!!!

Right?

Unfortunately, my son and I had other plans. My apologies to the unwashed masses. My son and I need a Minecraft server, and we need a decent operational model in place so we can keep the thing healthy and make updates. Therefore, in GitHub AT-AT’s vNext, I charted a course to stand up Virtual Machine-based workloads. I know, I know… boring! That’s not what all the cool kids are doing. Sorry, I don’t care; I wanna play some Minecraft.

Virtual Machine Core

In this release, I introduced two new modules: one called azure-vm-core and another called azure-vm-app. This mirrors the design of the Azure Functions modules. The “core” module focuses on laying down the Azure Shared Compute Gallery. From there, “app” modules can be deployed as many times as you need for various application use cases.

Even though this can support classic three-tier or more elaborate setups, my first application was a Minecraft server, which only needs a single baked image.

The Virtual Machine “Core” environment isn’t really that sophisticated. The code to provision it is almost identical to the “Azure Starter App”, and, if I think about it, it really should be structurally equivalent to the starter app, because the only thing we need to specify is the primary Azure region.

```hcl
module "app" {
  source = "../../modules/azure-vm-core"

  /* This is the only new input variable!!! */
  location = "eastus2"

  /* ↓↓↓ ↓↓↓ AT-AT BOILERPLATE ↓↓↓ ↓↓↓ */
  application_name       = var.application_name
  github_organization    = var.github_organization
  repository_name        = var.repository_name
  repository_description = var.repository_description
  repository_visibility  = var.repository_visibility
  terraform_version      = var.terraform_version

  commit_user = {
    name  = var.github_username
    email = var.github_email
  }

  environments = {
    dev = {
      subscription_id = var.azure_nonprod_subscription
      branch_name     = "develop"
      backend         = var.nonprod_backend
    }
    prod = {
      subscription_id = var.azure_prod_subscription
      branch_name     = "main"
      backend         = var.prod_backend
    }
  }
  /* ↑↑↑ ↑↑↑ AT-AT BOILERPLATE ↑↑↑ ↑↑↑ */
}
```

The only additional input variable is the location in which you want the Azure Shared Compute Gallery provisioned. As with the Azure Container Registry, this is only the primary region, because the service itself has built-in cross-region replication.

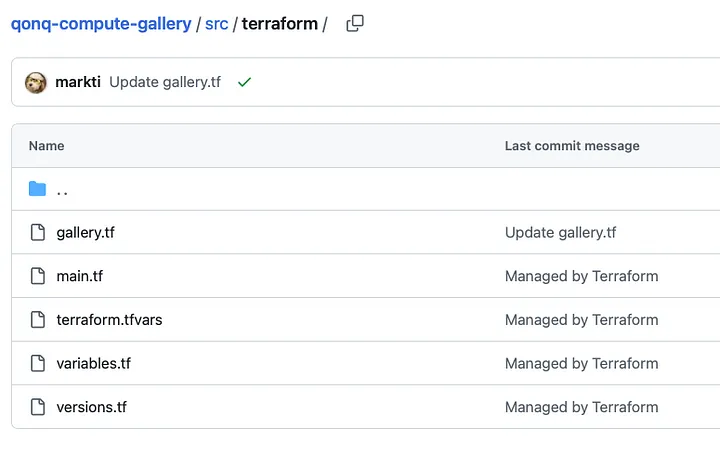

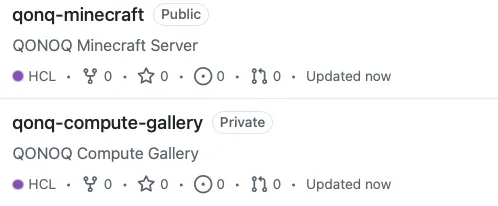

The GitHub AT-AT provisions a GitHub repository, in this case “qonq-compute-gallery”. This repository contains a fully operational Terraform GitOps environment (heck, they’re getting easier and easier to create now, so this isn’t as impressive anymore, is it?) that provisions an Azure Compute Gallery.

It is almost exactly like the “Azure Starter App”, except that it provisions an azurerm_shared_image_gallery resource. That’s pretty much it.
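For readers who haven’t seen the starter app, here is a minimal sketch of what the gallery portion of such a repository could look like. It is not the generated code: the `application_name`, `environment_name`, and `location` variables are hypothetical, and the naming convention is one I picked because, with `application_name = "qonq-gallery"` and `environment_name = "dev"`, it reproduces names like `rg-qonq-gallery-dev` and `galqonqgallerydev` used later in this post.

```hcl
terraform {
  required_providers {
    azurerm = {
      source = "hashicorp/azurerm"
    }
  }
}

provider "azurerm" {
  features {}
}

# Hypothetical inputs; the real repository may name these differently
variable "application_name" { type = string }
variable "environment_name" { type = string }
variable "location" { type = string }

resource "azurerm_resource_group" "gallery" {
  name     = "rg-${var.application_name}-${var.environment_name}"
  location = var.location
}

resource "azurerm_shared_image_gallery" "main" {
  # Gallery names can't contain hyphens, so strip them from the application name
  name                = "gal${replace(var.application_name, "-", "")}${var.environment_name}"
  resource_group_name = azurerm_resource_group.gallery.name
  location            = azurerm_resource_group.gallery.location
  description         = "Shared VM images for ${var.application_name} (${var.environment_name})"
}
```

Stripping hyphens is the main wrinkle: resource group names happily accept them, but gallery names only allow letters, digits, underscores, and periods.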

Virtual Machine App

Just like with the Azure Functions’ Microservices extension for the GitHub AT-AT, once you have your shared infrastructure (in this case, a shared image gallery), you can spin up multiple “apps” that each build and use custom VM images with Packer. The “app” module is where you’ll see more interesting updates. Its Terraform configuration includes references to the gallery you created in the core environment, as well as a new data structure in the environments object. That data structure ties each environment (dev, prod, etc.) to a specific gallery so that your Packer builds know exactly where to put the finished images.

```hcl
module "app" {
  source = "../../modules/azure-vm-app"

  location           = "eastus2"
  image_names        = ["ubuntu-minecraft-bedrock"]
  vm_size            = "Standard_D2_v2_Promo"
  packer_version     = "1.9.4"
  base_address_space = "10.64.0.0/22"

  /* ↓↓↓ ↓↓↓ AT-AT BOILERPLATE ↓↓↓ ↓↓↓ */
  application_name       = var.application_name
  github_organization    = var.github_organization
  repository_name        = var.repository_name
  repository_description = var.repository_description
  repository_visibility  = var.repository_visibility
  terraform_version      = var.terraform_version

  commit_user = {
    name  = var.github_username
    email = var.github_email
  }

  environments = {
    dev = {
      subscription_id = var.azure_nonprod_subscription
      branch_name     = "develop"
      backend         = var.nonprod_backend
      gallery = {
        name           = "galqonqgallerydev"
        resource_group = "rg-qonq-gallery-dev"
      }
      managed_image_destination = "rg-qonq-gallery-dev"
    }
    prod = {
      subscription_id = var.azure_prod_subscription
      branch_name     = "main"
      backend         = var.prod_backend
      gallery = {
        name           = "galqonqgalleryprod"
        resource_group = "rg-qonq-gallery-prod"
      }
      managed_image_destination = "rg-qonq-gallery-prod"
    }
  }
  /* ↑↑↑ ↑↑↑ AT-AT BOILERPLATE ↑↑↑ ↑↑↑ */
}
```

But WAIT!!! It’s not all boilerplate, is it? There’s a small but noteworthy change in how I handle variables for each environment. Rather than keep the image gallery settings in a separate map, I embedded them directly in each environment block so everything stays in one place. I debated whether that was the best approach; maybe a dedicated “galleries” map keyed by environment would be cleaner.

Nay, I modified the environments object structure to include the following data structure:

```hcl
{
  gallery = {
    name           = "galqonqgalleryprod"
    resource_group = "rg-qonq-gallery-prod"
  }
  managed_image_destination = "rg-qonq-gallery-prod"
}
```
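If you wanted Terraform to enforce that shape, the `environments` variable inside the app module could be declared along these lines. This is a sketch, not the module’s actual declaration: the structure of `backend` isn’t shown in this post, so it is typed as `any` here, and the validation rule (rejecting gallery names containing characters Azure doesn’t allow, such as hyphens) is my own addition.

```hcl
# Hypothetical type constraint for the azure-vm-app "environments" input
variable "environments" {
  description = "Per-environment settings, keyed by environment name (e.g. dev, prod)."
  type = map(object({
    subscription_id = string
    branch_name     = string
    backend         = any # shape not shown in the post; placeholder
    gallery = object({
      name           = string
      resource_group = string
    })
    managed_image_destination = string
  }))

  # Gallery names allow only letters, digits, underscores, and periods
  validation {
    condition = alltrue([
      for env in values(var.environments) : can(regex("^[A-Za-z0-9_.]+$", env.gallery.name))
    ])
    error_message = "Each gallery.name may only contain letters, digits, underscores, and periods."
  }
}
```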

Too much? Maybe. What do you think? Should I have created a separate “galleries” input variable, also a map keyed by environment_name? For now, I’m content with the current layout, but I’d love any thoughts on whether a different approach would be better or worse.
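For comparison, here is roughly what the separate `galleries` alternative could look like. The variable names and the `locals` re-join are hypothetical; the point is that the two maps have to be stitched back together by environment name inside the module.

```hcl
# Hypothetical alternative: gallery settings in their own map
variable "environments" {
  type = map(object({
    subscription_id = string
    branch_name     = string
    backend         = any # shape not shown in the post; placeholder
  }))
}

variable "galleries" {
  description = "Gallery settings, keyed by the same environment names as var.environments."
  type = map(object({
    name                      = string
    resource_group            = string
    managed_image_destination = string
  }))
}

locals {
  # Re-join both maps per environment. Indexing var.galleries[name] fails at
  # plan time if an environment has no matching gallery entry.
  environments_with_gallery = {
    for name, env in var.environments : name => merge(env, {
      gallery = var.galleries[name]
    })
  }
}
```

That extra bookkeeping is one argument for the embedded layout: with the gallery inside each environment entry, there is no second map whose keys have to stay in sync.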

First Things First: Attaching the Image Definitions

Before I can start rocking this app, though, I need to provision the azurerm_shared_image resource (the image definition) within the Azure Shared Compute Gallery. I was torn about where to put this. Should the “app” itself do it? You know how I like to have attachable/detachable infrastructure components. It would be great if the “app” could attach itself to, and detach itself from, the gallery completely autonomously.

However, I ended up just dropping the image definitions in the “core” repository and taking advantage of that sweet, sweet Terraform GitOps to provision it.
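For reference, a minimal sketch of such image definitions in the core repository might create one `azurerm_shared_image` per entry in a hypothetical `image_names` list (mirroring the app module’s input). The gallery and resource group variables, and the `publisher`/`offer` values in the `identifier` block, are placeholders; Azure requires the publisher/offer/SKU combination to be unique within a gallery, which is why the SKU reuses the image name here.

```hcl
terraform {
  required_providers {
    azurerm = {
      source = "hashicorp/azurerm"
    }
  }
}

provider "azurerm" {
  features {}
}

# Hypothetical inputs for the core repository
variable "gallery_name" { type = string }
variable "gallery_resource_group" { type = string }
variable "location" { type = string }

variable "image_names" {
  type    = list(string)
  default = ["ubuntu-minecraft-bedrock"]
}

# One image definition per name; Packer later publishes versions into these
resource "azurerm_shared_image" "app" {
  for_each = toset(var.image_names)

  name                = each.value
  gallery_name        = var.gallery_name
  resource_group_name = var.gallery_resource_group
  location            = var.location
  os_type             = "Linux"

  # Placeholder publisher/offer; the SKU keeps each combination unique
  identifier {
    publisher = "qonq"
    offer     = "vm-apps"
    sku       = each.value
  }
}
```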

A “Real-World” Application: Minecraft

I know some folks are eagerly awaiting Kubernetes or Ansible integrations, but I decided to scratch a personal itch first: hosting a Minecraft server for my son and me.

It’s not as glamorous as a cloud-native microservices project, but the process still highlights how the new VM-based approach can be leveraged for any general-purpose application. With the shared gallery and Packer-based baking pipeline, I get a stable, version-controlled VM image that I can deploy reliably.
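Here is a minimal sketch of the Packer side of that pipeline, written as a Packer HCL2 template for the `azure-arm` builder. It is not the template the AT-AT generates: the variable names, the Ubuntu 22.04 marketplace source, the build VM size, and Azure CLI authentication are all assumptions, and the actual Minecraft install steps are left out. What it illustrates is how the per-environment `gallery` and `managed_image_destination` settings map onto the builder’s `shared_image_gallery_destination` block and managed image options.

```hcl
packer {
  required_plugins {
    azure = {
      source  = "github.com/hashicorp/azure"
      version = ">= 2.0.0"
    }
  }
}

# Hypothetical variables, e.g. supplied by the workflow with -var flags
variable "location" {
  type    = string
  default = "eastus2"
}
variable "gallery_name" { type = string }
variable "gallery_resource_group" { type = string }
variable "managed_image_destination" { type = string }
variable "image_name" {
  type    = string
  default = "ubuntu-minecraft-bedrock"
}
# Gallery image versions must look like MAJOR.MINOR.PATCH, e.g. 1.0.7
variable "image_version" { type = string }

source "azure-arm" "ubuntu" {
  # Reuse an existing Azure CLI login session (assumption)
  use_azure_cli_auth = true

  os_type         = "Linux"
  image_publisher = "Canonical"
  image_offer     = "0001-com-ubuntu-server-jammy"
  image_sku       = "22_04-lts" # Gen1 image, matching a default (V1) image definition

  location = var.location
  vm_size  = "Standard_D2s_v3" # size of the temporary build VM (assumption)

  # Managed image copy, written to the environment's managed_image_destination
  managed_image_name                = "${var.image_name}-${var.image_version}"
  managed_image_resource_group_name = var.managed_image_destination

  # New version published under the image definition created by the core repo
  shared_image_gallery_destination {
    resource_group = var.gallery_resource_group
    gallery_name   = var.gallery_name
    image_name     = var.image_name
    image_version  = var.image_version
  }
}

build {
  sources = ["source.azure-arm.ubuntu"]

  provisioner "shell" {
    environment_vars = ["DEBIAN_FRONTEND=noninteractive"]
    execute_command  = "chmod +x {{ .Path }}; {{ .Vars }} sudo -E sh '{{ .Path }}'"
    inline = [
      "apt-get update",
      "apt-get upgrade -y",
      # Minecraft server install steps would go here (not shown in the post)
      "/usr/sbin/waagent -force -deprovision+user && export HISTSIZE=0 && sync"
    ]
    inline_shebang = "/bin/sh -x"
  }
}
```

A workflow step could supply the environment-specific values with `-var` flags, for example `packer build -var "gallery_name=galqonqgallerydev" -var "image_version=1.0.7" .` alongside the other variables. The final `waagent` deprovision step generalizes the VM so the gallery image can be reused with fresh credentials.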

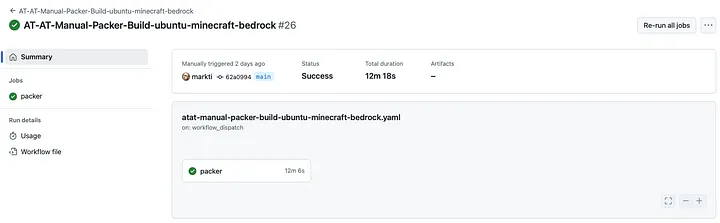

Once that is done, my “app” environment is free to bake its images using Packer. To accomplish that, I added a GitHub Actions workflow that runs the Packer build, plus Terraform that uses the resulting image to provision a simple Virtual Machine environment.
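On the Terraform side, a minimal sketch of consuming that image could look like the following. It resolves the newest version in the gallery with the `azurerm_shared_image_version` data source (passing `latest` as the version name) and boots an `azurerm_linux_virtual_machine` from it inside a subnet carved out of `base_address_space`. Resource names, the admin username, and the SSH key variable are hypothetical, and it deliberately omits the public IP and network security group rules a real Minecraft server would need.

```hcl
terraform {
  required_providers {
    azurerm = {
      source = "hashicorp/azurerm"
    }
  }
}

provider "azurerm" {
  features {}
}

# Hypothetical inputs; names mirror the app module's inputs where possible
variable "location" {
  type    = string
  default = "eastus2"
}
variable "vm_size" { type = string }
variable "gallery_name" { type = string }
variable "gallery_resource_group" { type = string }
variable "admin_ssh_public_key" { type = string }
variable "image_name" {
  type    = string
  default = "ubuntu-minecraft-bedrock"
}
variable "base_address_space" {
  type    = string
  default = "10.64.0.0/22"
}

# Resolve the newest image version that Packer published to the gallery
data "azurerm_shared_image_version" "latest" {
  name                = "latest"
  image_name          = var.image_name
  gallery_name        = var.gallery_name
  resource_group_name = var.gallery_resource_group
}

resource "azurerm_resource_group" "vm" {
  name     = "rg-minecraft-vm" # hypothetical name
  location = var.location
}

resource "azurerm_virtual_network" "main" {
  name                = "vnet-minecraft"
  location            = azurerm_resource_group.vm.location
  resource_group_name = azurerm_resource_group.vm.name
  address_space       = [var.base_address_space]
}

resource "azurerm_subnet" "vm" {
  name                 = "snet-vm"
  resource_group_name  = azurerm_resource_group.vm.name
  virtual_network_name = azurerm_virtual_network.main.name
  # First /24 carved out of the /22 base address space (10.64.0.0/24 by default)
  address_prefixes = [cidrsubnet(var.base_address_space, 2, 0)]
}

resource "azurerm_network_interface" "vm" {
  name                = "nic-minecraft"
  location            = azurerm_resource_group.vm.location
  resource_group_name = azurerm_resource_group.vm.name

  ip_configuration {
    name                          = "internal"
    subnet_id                     = azurerm_subnet.vm.id
    private_ip_address_allocation = "Dynamic"
  }
}

resource "azurerm_linux_virtual_machine" "minecraft" {
  name                  = "vm-minecraft"
  resource_group_name   = azurerm_resource_group.vm.name
  location              = azurerm_resource_group.vm.location
  size                  = var.vm_size
  admin_username        = "azureuser"
  network_interface_ids = [azurerm_network_interface.vm.id]

  # Boot from the Packer-baked gallery image instead of a marketplace image
  source_image_id = data.azurerm_shared_image_version.latest.id

  admin_ssh_key {
    username   = "azureuser"
    public_key = var.admin_ssh_public_key
  }

  os_disk {
    caching              = "ReadWrite"
    storage_account_type = "Standard_LRS"
  }
}
```

Pinning to `latest` means every new Packer build rolls out on the next apply; an explicit version string would give you a slower, more deliberate promotion path.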

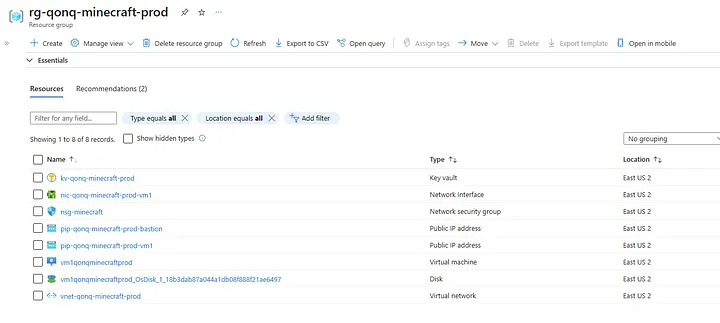

Here is the stuff it provisions:

If you follow my new Udemy course, you’ll learn how to set up a similar environment from scratch, piece by piece. It’s called Terraform 101: Azure Edition. It’s a great place to start for somebody new to working with Terraform and/or new to working with Microsoft Azure.

Conclusion

These two new modules for Virtual Machine-based workloads expand the GitHub AT-AT’s footprint beyond its previous microservices focus by automating the creation and management of Virtual Machine images within a fully operational Packer- and Terraform-based GitOps workflow.

It’s especially useful for scenarios where container-based solutions might be overkill or when you just need direct VM control. Whether you want to stand up a production-ready workload or something simpler, like a Minecraft server, you now have a straightforward path to replicate and roll out consistent virtual machine images across your environments.

As always, I’m eager to keep the conversation going, so don’t hesitate to share your feedback on how you might organize or refine these new features.

Happy Azure Terraforming!