Hosting a Console Application as a Guest Executable in Service Fabric

I’ve been experimenting with the efficiency of Guest Executable workloads hosted in Service Fabric. One of the key benefits of Service Fabric is that you can host applications that weren’t originally designed for the cloud and make them hyper-scalable. That’s great, but could it be done with an application that was designed without even the web in mind? An application built to run locally on an end user’s machine and driven by a mouse and keyboard (or even the command line)?

I decided it was a worthy challenge to see whether it could be done. The first application that popped into my head was HandBrake. For those of you who don’t know, HandBrake is the end-all-be-all of video encoding software. It’s a great utility that can take DVDs, VCDs, and existing videos and re-encode them into pretty much any format you want.

It is an open-source, cross-platform project with builds for a multitude of platforms. I first discovered it on Mac OS X when I was looking for a utility that could make digital copies of my DVDs so I could play them on my iPhone or iPod Touch. It worked great. It has a nifty GUI, but it also has a CLI (command-line interface), so you can script your encodes.

This seemed like a good example of an existing executable that does something I wouldn’t want to rewrite in C# as a stateless microservice. The good folks at the HandBrake open-source project have already done a fabulous job making a fast and efficient video transcoding utility. Why reinvent the wheel? The question is: would I benefit from hosting it in an Azure Service Fabric cluster? Would it be just as efficient? Would it churn through those video encodes faster than a single PC? Would it even work?

The Problem

Host HandBrake as a guest executable so I could encode videos without rewriting its complex video transcoding algorithms as a stateless microservice.

Technical Challenges

To get this to work, I needed a couple of things.

First, I needed a way to invoke HandBrake. Service Fabric expects a guest executable to be a long-running process; it was not designed to host ‘run-and-done’ EXEs. HandBrake, on the other hand, is a console application that takes in command-line arguments, does some processing, and terminates once the processing is complete.

Second, I needed a place where HandBrake could both read the original source material (DVD data files, video files, etc.) and save the output media files it produces. Because HandBrake was designed to run on a single PC, it expects read/write access to a file system path where the source material lives and where it can save its output.

The Solution

I would build a ‘Kickstarter app’ that runs in an infinite loop, receives messages, and translates each message into an invocation of the HandBrake console application. I would also set up a network share that all my Service Fabric nodes could access through a locally mounted drive.

Kickstarter App

HandBrake doesn’t listen on ports or monitor a queue. It is a ‘run-and-done’ EXE and is designed to terminate. My Kickstarter app therefore needs to be a long-running EXE with some mechanism for receiving messages that it can use to hand execution off to HandBrake.

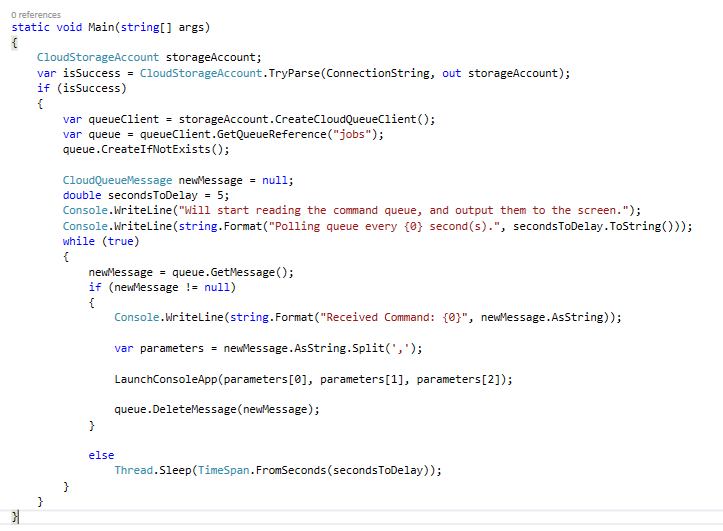

The first approach I thought of was to create a simple Azure Storage Queue and monitor it from the Kickstarter app. When the Kickstarter app gets a message, it parses the message and executes HandBrake through its command-line interface.

Monitoring the Azure Storage Queue is drop-dead simple:

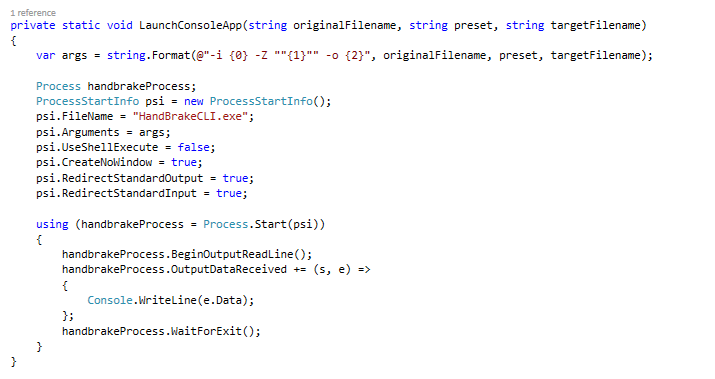
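As a rough sketch of that monitoring loop (not the code in the screenshot), here is the receive, process, delete pattern with the queue hidden behind a hypothetical `IJobQueue` interface so it runs without an Azure account. In a real app, `TryReceive` and `Complete` would wrap the Azure Storage Queue client's get-message and delete-message calls; the interface, the class names, and the idle delay are my assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical abstraction over a message queue such as an Azure Storage Queue.
public interface IJobQueue
{
    // Returns the next message, or null when the queue is empty.
    string? TryReceive();

    // Deletes a message after it has been processed successfully.
    void Complete(string message);
}

public static class KickstarterLoop
{
    // Polls the queue until cancellation is requested and returns the number
    // of messages processed. Sleeps for idleDelay whenever the queue is empty.
    public static int Run(IJobQueue queue, Action<string> process,
                          TimeSpan idleDelay, CancellationToken token)
    {
        int processed = 0;
        while (!token.IsCancellationRequested)
        {
            string? message = queue.TryReceive();
            if (message is null)
            {
                // Back off instead of hammering the queue; wakes early on cancellation.
                token.WaitHandle.WaitOne(idleDelay);
                continue;
            }

            process(message);        // e.g. invoke HandBrakeCLI.exe
            queue.Complete(message); // skipped if process() throws
            processed++;
        }
        return processed;
    }
}

// In-memory stand-in for local testing.
public sealed class InMemoryJobQueue : IJobQueue
{
    private readonly Queue<string> _pending = new Queue<string>();
    public List<string> Completed { get; } = new List<string>();

    public void Enqueue(string message) => _pending.Enqueue(message);
    public string? TryReceive() => _pending.Count > 0 ? _pending.Dequeue() : null;
    public void Complete(string message) => Completed.Add(message);
}
```

Deleting only after processing matters with Azure Storage Queues: a message that is received but never deleted becomes visible again once its visibility timeout expires, so a node that crashes mid-encode leaves the job to be retried rather than lost.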

Invoking HandBrake is even more straightforward.

The only tricky bit was getting the standard output from the console application sent to my own console so I could see what was happening.

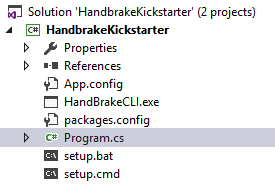

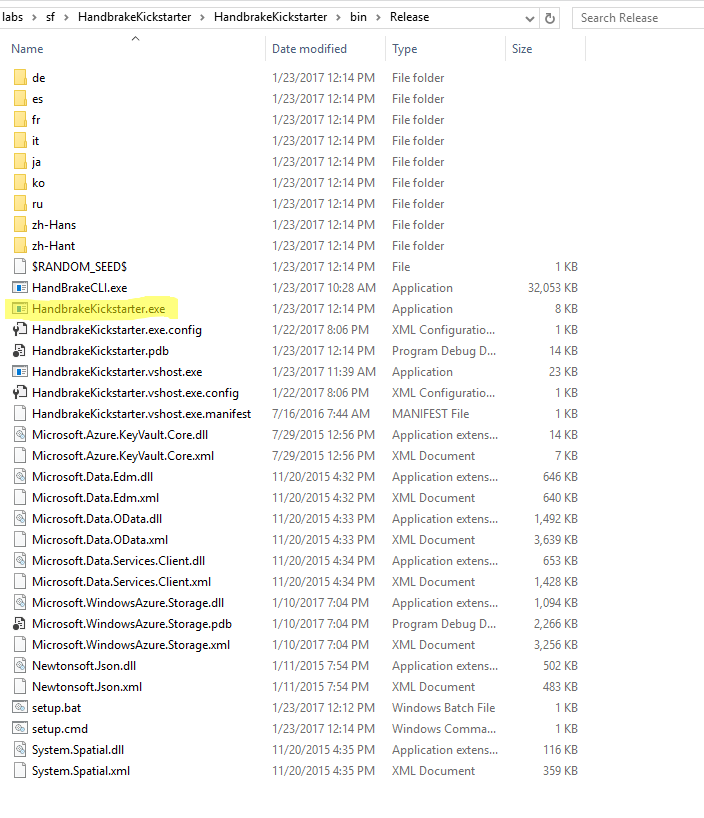
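For the standard-output part, a minimal sketch using `System.Diagnostics.Process` could look like this: redirect the child's streams (which requires `UseShellExecute = false`) and forward each line as it arrives. The `CliRunner` name and the choice to forward stderr as well as stdout are my assumptions, not necessarily what the original app does.

```csharp
using System;
using System.Diagnostics;

public static class CliRunner
{
    // Starts a console program, forwards every line it writes to stdout and
    // stderr to onLine, waits for it to exit, and returns its exit code.
    public static int RunAndEcho(string fileName, string arguments, Action<string> onLine)
    {
        var startInfo = new ProcessStartInfo(fileName, arguments)
        {
            UseShellExecute = false,        // required for stream redirection
            RedirectStandardOutput = true,
            RedirectStandardError = true,
            CreateNoWindow = true
        };

        using var process = new Process { StartInfo = startInfo };

        // These handlers run on thread-pool threads as lines arrive;
        // e.Data is null once the stream has been closed.
        process.OutputDataReceived += (_, e) => { if (e.Data != null) onLine(e.Data); };
        process.ErrorDataReceived += (_, e) => { if (e.Data != null) onLine(e.Data); };

        process.Start();
        process.BeginOutputReadLine();
        process.BeginErrorReadLine();

        // The parameterless overload also waits for the async output to drain.
        process.WaitForExit();
        return process.ExitCode;
    }
}
```

A call such as `CliRunner.RunAndEcho("HandBrakeCLI.exe", arguments, Console.WriteLine)` echoes HandBrake's output to the Kickstarter app's console. Because the two handlers can fire concurrently, `onLine` should be thread-safe; `Console.WriteLine` is.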

As you can tell, there isn’t a whole lot to my Kickstarter app. The solution looks like this:

Program.cs contains the main method and all the code. I added HandBrakeCLI.exe to the solution mainly to package it up. Its Build Action is set to Content, and it is set to copy to the output directory. The same goes for ‘setup.bat’.

When this builds, everything gets spit out into the Release folder, which looks like this:

My Service Fabric application will use “HandbrakeKickstarter.exe” as its main executable. As you saw in the above code, this application basically runs in an infinite loop checking for new messages in the queue and processing them by invoking the “HandBrakeCLI.exe” with some command line arguments.
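The post doesn't spell out the message format, so purely as an illustration, suppose each message is `<input>|<output>` with both paths relative to the mounted share. Turning such a message into HandBrakeCLI arguments could look like the sketch below. The message format, the `HandBrakeArgs` class, and the share root are hypothetical; `-i` and `-o` are HandBrakeCLI's input and output options.

```csharp
using System;
using System.IO;

public static class HandBrakeArgs
{
    // Hypothetical message format: "<input file>|<output file>", both
    // relative to shareRoot (for example a drive letter mapped by setup.bat).
    public static string FromMessage(string message, string shareRoot)
    {
        string[] parts = message.Split('|');
        if (parts.Length != 2 || parts[0].Trim().Length == 0 || parts[1].Trim().Length == 0)
        {
            throw new FormatException($"Expected '<input>|<output>' but got '{message}'.");
        }

        string input = Path.Combine(shareRoot, parts[0].Trim());
        string output = Path.Combine(shareRoot, parts[1].Trim());

        // -i is HandBrakeCLI's input option and -o its output option;
        // quoting keeps paths containing spaces intact.
        return $"-i \"{input}\" -o \"{output}\"";
    }
}
```

Throwing on a malformed message, rather than guessing, leaves the polling loop free to decide what to do with it (log it, skip it, or move it aside).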

The Network Share

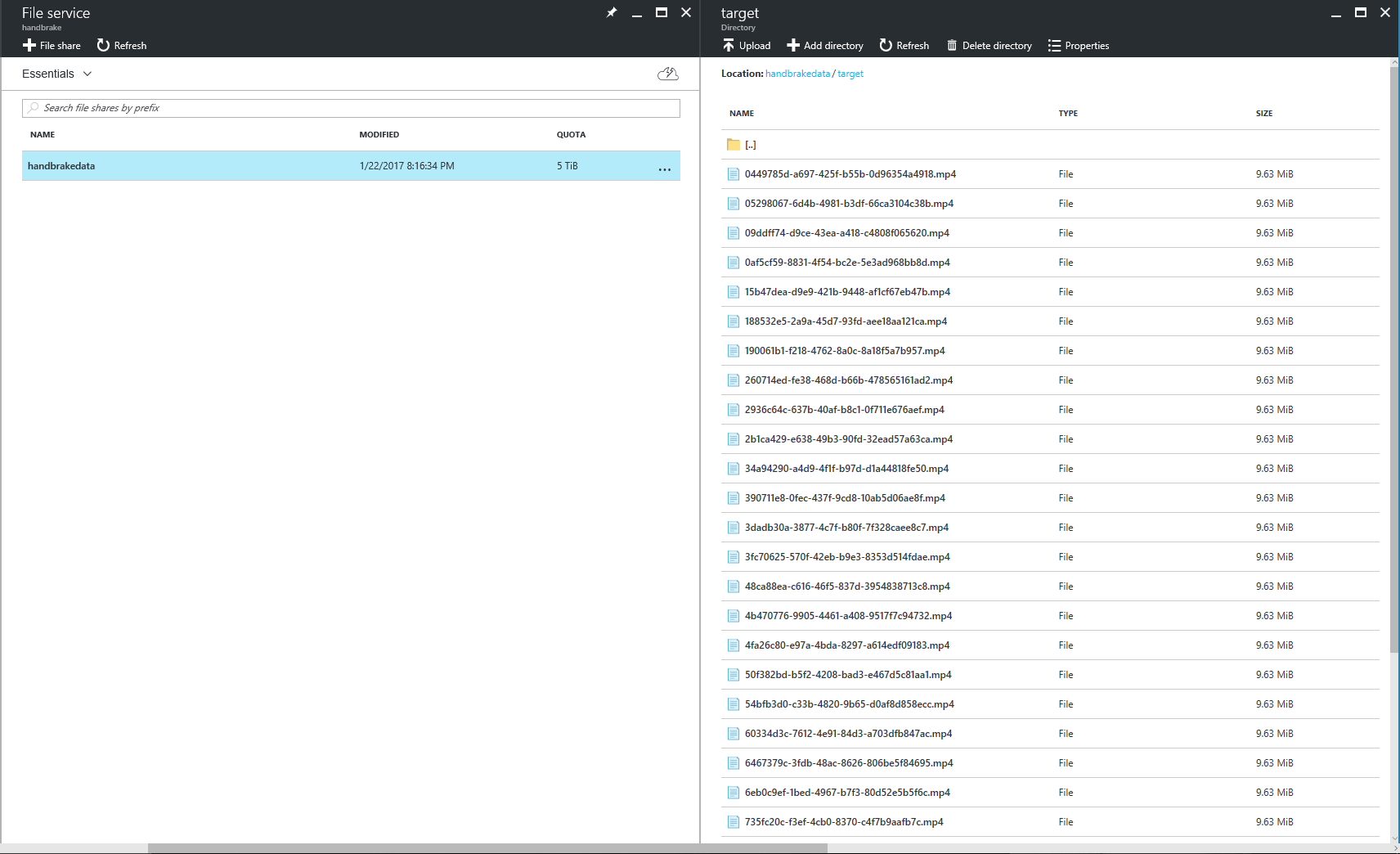

This was a bit tricky. I figured the easiest course of action was to use Azure Files (a relatively new feature of Azure Storage), which essentially lets you create an SMB network share in an Azure Storage account.

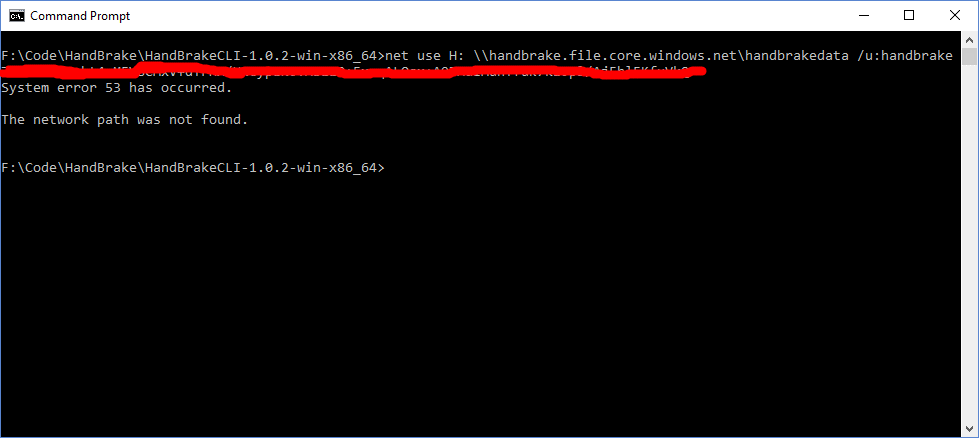

I had some trouble mounting my Azure Files network share locally. This might be because my ISP (AT&T) blocks port 445, the port SMB uses (I read online that this is common practice among ISPs, you know, for ‘reasons’). I kept getting ‘System error 53 has occurred’ and ‘The network path was not found.’

I didn’t really want to go through the hassle of talking to AT&T about it. 99% chance their tech support would have no clue what I was talking about and I would spin my wheels for 4 hours and have nothing to show for it.
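Since Azure Files is reached over SMB on TCP port 445, one way to test the blocked-port theory before calling anyone is a quick connection probe. This is a minimal sketch; `PortProbe` is a name I made up, and `<account>` in the usage note stands in for your storage account name.

```csharp
using System;
using System.Net.Sockets;
using System.Threading.Tasks;

public static class PortProbe
{
    // Returns true if a TCP connection to host:port succeeds within the timeout.
    public static bool CanConnect(string host, int port, TimeSpan timeout)
    {
        using var client = new TcpClient();
        try
        {
            Task connect = client.ConnectAsync(host, port);
            // Wait returns false on timeout; a refused connection or DNS
            // failure faults the task and surfaces as an AggregateException.
            return connect.Wait(timeout) && client.Connected;
        }
        catch (AggregateException) { return false; }
        catch (SocketException) { return false; }
    }
}
```

Calling `PortProbe.CanConnect("<account>.file.core.windows.net", 445, TimeSpan.FromSeconds(5))` from home and from an Azure VM, and getting `false` in the first case and `true` in the second, would point at the network path rather than at the share itself.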

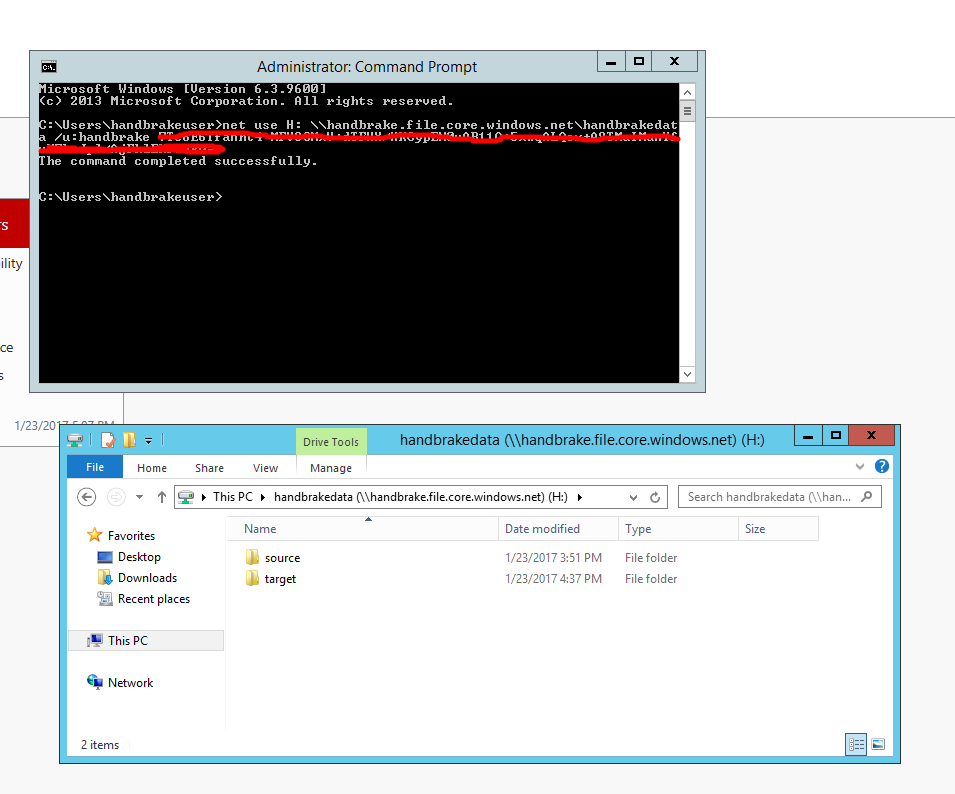

So, just to ensure that my share was working correctly in Azure, I provisioned an Azure Virtual Machine to test the Azure Files network share.

Lo and behold, it worked brilliantly.

Now the question was: ‘How do I get my Service Fabric cluster to mount this share on every node so that HandBrake can write to it?’

I found an article that provided some very high-level guidance about migrating Worker Roles to Service Fabric. It was so shallow it was almost useless. It did, however, point me toward SetupEntryPoint, which is the right place to launch startup tasks in Service Fabric. What the article neglected to mention was that, depending on what your startup tasks do, you may need to specify a RunAsPolicy in ApplicationManifest.xml.

Here is what I did:

I created a BAT file called ‘setup.bat’ (you saw it in my Kickstarter app’s solution earlier) and added it to my guest executable’s code folder. Inside setup.bat, a NET USE command maps a network drive to my Azure Files network share.

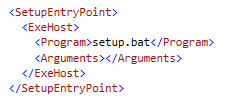

I added this to the ServiceManifest.xml file:

This causes ‘setup.bat’ to run before my Kickstarter app is launched.

However, when I published this to my Service Fabric cluster, I got errors. Really unhelpful errors. My favorite kind. ^_^

What could possibly cause a NET USE command to fail? I mean, I had just tested it on an Azure Virtual Machine and it worked perfectly: the exact same BAT file, character for character. So what could be the problem? Security.

I figured that whatever account Service Fabric was using to run ‘setup.bat’ (i.e. the Setup entry point) must not have sufficient privileges, so I looked into how to specify those privileges. It’s pretty straightforward once you know the right XML.

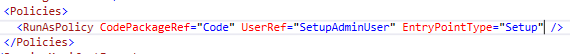

In the ApplicationManifest.xml file, I added the following:

This specifies that a user (not yet defined) called SetupAdminUser is used to run the Setup entry point (i.e. my ‘setup.bat’ script with the NET USE command in it).

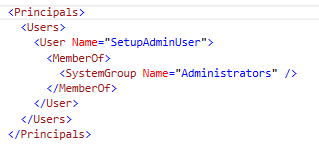

Then I added this inside the root element of ApplicationManifest.xml:

Make sure to add it after the DefaultServices element. I’m not sure exactly why, but putting it anywhere else makes it impossible to deploy the application to your cluster. Apparently the manifest’s XML schema enforces the order of its child elements.

So I publish to Service Fabric and what happens?

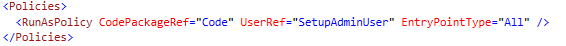

Well, no errors this time, but HandBrake isn’t doing anything. So I wondered what the issue might be. Then I realized that in Windows, when you map a drive, it is usually mapped only for you, not for all users. So I took a wild guess and changed the RunAsPolicy’s EntryPointType from “Setup” to “All”. That way, the Kickstarter app runs under the same identity as ‘setup.bat’. Not ideal from a security standpoint, but I just wanted to see if it would work.

It did! Now, all I have to do is queue up new messages on my Azure Storage Queue and HandBrake will encode the video for me. I threw some load at my Service Fabric cluster and it tore through it well enough! ^_^
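Because the drive-mapping problem failed silently (HandBrake just sat there), a cheap safeguard would be to have the Kickstarter app verify at startup that the share is visible to whatever identity it runs under, and fail loudly if it is not. A minimal sketch; `ShareCheck` and the share root you pass in are hypothetical.

```csharp
using System;
using System.IO;

public static class ShareCheck
{
    // Throws if shareRoot (for example a drive letter mapped by setup.bat)
    // is not visible to the account this process is running as.
    public static void EnsureVisible(string shareRoot)
    {
        if (!Directory.Exists(shareRoot))
        {
            throw new DirectoryNotFoundException(
                $"'{shareRoot}' is not visible to user '{Environment.UserName}'. " +
                "A drive mapped with NET USE is only visible to the user that mapped it; " +
                "check which entry points the RunAsPolicy applies to.");
        }
    }
}
```

Calling this once before entering the polling loop turns a silent "HandBrake isn’t doing anything" into a crash with a readable message, which should then surface through Service Fabric's health reporting rather than going unnoticed.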

Now, if you’ll excuse me, I have to go delete my cluster before my bill goes nuts!