The Azure Speech API is a game changer

For advanced speech-based use cases, never before has so much power been put in the hands of developers. Whether you are transcribing customer calls to improve customer service or using chatbots to build new speech-based interfaces for your employees, the Azure Speech API has a sophisticated feature set that opens up a wealth of new opportunities for an increasingly popular input method: voice.

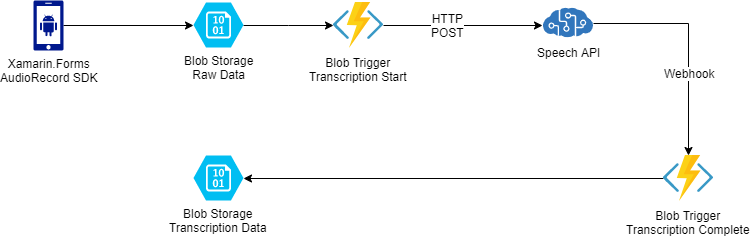

I set up an architecture using Azure Functions and the Azure Speech API to test it out, and I was quite impressed.

The solution consists of the following components:

- A Xamarin.Forms app that uses the low-level Android Audio SDK to encode WAV audio files and upload them to Azure Blob Storage

- An Azure Function with a BlobTrigger that runs whenever a file is uploaded and submits the file to the Azure Speech API for transcription

- The Azure Speech API transcribes the WAV audio file and posts the results to a second Azure Function via a webhook

- Finally, the webhook function extracts the transcription results and stores them in another Azure Blob Storage file for later use
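Low-level Android recording APIs typically hand back raw PCM samples rather than a finished file, so producing a WAV means prepending a RIFF header. The Python sketch below shows what that header contains for 16-bit PCM. It is an illustration of the WAV format, not the app's actual Xamarin code; the function name `pcm16_to_wav` and the 16 kHz mono defaults are assumptions (16 kHz, 16-bit mono PCM is a format the Speech service commonly accepts).

```python
import struct


def pcm16_to_wav(pcm: bytes, sample_rate: int = 16000, channels: int = 1) -> bytes:
    """Wrap raw 16-bit little-endian PCM in a canonical 44-byte WAV (RIFF) header.

    Hypothetical helper for illustration; defaults assume 16 kHz mono audio.
    """
    bits_per_sample = 16
    block_align = channels * bits_per_sample // 8  # bytes per sample frame
    if len(pcm) % block_align != 0:
        raise ValueError("PCM length must be a whole number of sample frames")
    byte_rate = sample_rate * block_align  # bytes of audio per second
    data_size = len(pcm)

    header = struct.pack(
        "<4sI4s4sIHHIIHH4sI",  # little-endian, no padding: exactly 44 bytes
        b"RIFF",
        36 + data_size,  # RIFF chunk size: file size minus the first 8 bytes
        b"WAVE",
        b"fmt ",
        16,  # size of the fmt chunk body for plain PCM
        1,  # audio format 1 = uncompressed PCM
        channels,
        sample_rate,
        byte_rate,
        block_align,
        bits_per_sample,
        b"data",
        data_size,
    )
    return header + pcm


if __name__ == "__main__":
    # One second of silence at 16 kHz mono, written to a file ready for upload.
    with open("silence.wav", "wb") as f:
        f.write(pcm16_to_wav(b"\x00\x00" * 16000))
```

The resulting bytes can be opened by any WAV reader, including Python's standard `wave` module, which is an easy way to sanity-check a recording before it is uploaded to Blob Storage.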

The Azure Speech API also has advanced features for improving transcription accuracy. You can supply custom models tuned to an individual speaker's voice, or to a set of industry-specific pronunciations for jargon and acronyms that might trip up a general-purpose speech-to-text engine.
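As a rough sketch of how the BlobTrigger function might submit a file, and how a custom model plugs in, the Python below builds (but does not send) a batch transcription request. It assumes the Speech-to-text v3.0 REST API (`/speechtotext/v3.0/transcriptions` with the `Ocp-Apim-Subscription-Key` header); the API version used in the setup above may differ, webhook registration is a separate step not shown here, and the function name and `displayName` value are hypothetical.

```python
import json
import urllib.request
from typing import Optional


def build_transcription_request(
    region: str,
    subscription_key: str,
    audio_url: str,
    locale: str = "en-US",
    custom_model_url: Optional[str] = None,
) -> urllib.request.Request:
    """Build a batch transcription request without sending it.

    Assumes the Speech-to-text v3.0 REST API. audio_url must be readable by
    the service, e.g. a blob URL carrying a SAS token.
    """
    endpoint = (
        f"https://{region}.api.cognitive.microsoft.com"
        "/speechtotext/v3.0/transcriptions"
    )
    body = {
        "contentUrls": [audio_url],
        "locale": locale,
        "displayName": "blob-trigger-transcription",  # hypothetical label
    }
    if custom_model_url is not None:
        # Use a custom model (e.g. trained on industry vocabulary) instead of
        # the base model; referenced by its model URL in the v3.0 API.
        body["model"] = {"self": custom_model_url}
    return urllib.request.Request(
        endpoint,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": subscription_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen` would submit the job. Keeping the request construction separate from sending makes it easy to inspect the body, and swapping in a custom model becomes a one-argument change.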

This offering from Azure is quite compelling and has broad applications across many industry verticals. I'm looking forward to digging deeper into the modeling capabilities to overcome accents and obscure vocabulary that might throw the engine off.