GitHub AT-AT Now Supports Active-Active Azure Functions Scenario

I have developed a new module in the GitHub AT-AT that provisions a multi-region active-active Azure Functions core environment. It expands the original GitHub AT-AT capabilities with a scenario that automates the hosting environment for an active-active Azure Functions solution. Just like the existing dual backend capability, which enables multi-region infrastructure for reliability and scalability, this active-active Azure Functions core scenario will serve as the foundation for more complex use cases. Specifically, it lets you provision multiple Function Apps on top of a shared infrastructure layer that provides the compute, observability, security, and general resource management you need to run resilient, globally distributed serverless workloads.

Throughout this article, I will describe the enhancements I made to various modules, how I’ve leveraged GitHub Actions and Terraform to streamline deployments, and the overall steps you can follow to implement this scenario in your own environment. By the time we are done, you’ll have a solid understanding of the new sub-module, the enhancements to the core components, and exactly how to get it up and running.

Enhancements to the Core Modules

When adding this new scenario to the GitHub AT-AT, I took the opportunity to refine and enhance some of the original core modules. The most notable enhancement involves modifying the “Apply on Push” workflow. I introduced GitHub Actions concurrency controls on the workflow so that overlapping runs no longer execute at the same time when several changes are pushed to the main branch in quick succession. This reduces the potential for conflicts, such as two runs competing for the same Terraform state, and keeps the deployment workflow clear and deterministic. It’s a subtle change, but one that improves reliability and predictability, which is exactly what we need when automating complex infrastructure scenarios like multi-region active-active solutions.
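As a rough illustration of that concurrency control, the sketch below commits a workflow file through the GitHub provider's `github_repository_file` resource. The file path, the concurrency group name, the job steps, and `var.repository_name` are assumptions made for this example, not the actual AT-AT source. The `$${{ ... }}` form escapes Terraform interpolation so the rendered YAML contains a literal `${{ ... }}` expression.

```hcl
terraform {
  required_providers {
    github = {
      source = "integrations/github"
    }
  }
}

variable "repository_name" {
  type        = string
  description = "Hypothetical: repository that receives the workflow file."
}

resource "github_repository_file" "apply_on_push" {
  repository          = var.repository_name
  branch              = "main"
  file                = ".github/workflows/apply-on-push.yml" # hypothetical path
  commit_message      = "Add concurrency control to Apply on Push"
  overwrite_on_create = true

  content = <<-EOT
    name: Apply on Push

    on:
      push:
        branches:
          - main

    # Only one run per group executes at a time; a newer run waits
    # instead of overlapping with the one already in progress.
    concurrency:
      group: terraform-apply-$${{ github.ref }}
      cancel-in-progress: false

    jobs:
      apply:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - uses: hashicorp/setup-terraform@v3
          # Placeholder step: the real workflow would also configure the backend.
          - run: terraform init && terraform apply -auto-approve
  EOT
}
```

Leaving `cancel-in-progress` set to `false` is a deliberate choice in this sketch: cancelling a Terraform apply midway is usually riskier than letting it finish.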

Another key improvement was the extraction of GitHub Environment configuration into a new module called environment-terraform-azure/github. This new module provisions the Authentication Context, Backend Context, and Terraform Execution Context across all user-defined environments. Whether you define environments as DEV, TEST, or PROD, this module ensures that your Azure environments are consistently bootstrapped and ready to be consumed by the rest of the pipeline. It centralizes the logic so that I no longer have to replicate environment-specific configuration for each scenario or repository. Although I haven’t yet back-ported this to the “Azure Terraform Starter App,” I fully intend to do so later. For now, it acts as a drop-in replacement for the Azure Functions Core Template, allowing me to avoid packaging this module as yet another repository. By doing so, I maintain a leaner codebase with more reusable components that are easier to reason about and maintain over time.
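To make the idea of a centralized environment module more concrete, here is a minimal sketch of how such a module might loop over user-defined environments with the GitHub provider. The variable shapes, the `backend` object fields, and the GitHub variable names (`ARM_SUBSCRIPTION_ID`, `BACKEND_STORAGE_ACCOUNT_NAME`) are assumptions for illustration, and the real environment-terraform-azure/github module may be organized quite differently.

```hcl
terraform {
  required_providers {
    github = {
      source = "integrations/github"
    }
  }
}

variable "repository_name" {
  type = string
}

# Hypothetical shape: one entry per GitHub Environment (dev, test, prod, ...).
variable "environments" {
  type = map(object({
    subscription_id = string
    branch_name     = string
    backend = object({
      resource_group_name  = string
      storage_account_name = string
      container_name       = string
    })
  }))
}

# One GitHub Environment per user-defined environment.
resource "github_repository_environment" "this" {
  for_each    = var.environments
  repository  = var.repository_name
  environment = each.key
}

# Authentication context: which Azure subscription this environment targets.
resource "github_actions_environment_variable" "subscription_id" {
  for_each      = var.environments
  repository    = var.repository_name
  environment   = github_repository_environment.this[each.key].environment
  variable_name = "ARM_SUBSCRIPTION_ID"
  value         = each.value.subscription_id
}

# Backend context: where this environment's Terraform state lives.
resource "github_actions_environment_variable" "backend_storage_account" {
  for_each      = var.environments
  repository    = var.repository_name
  environment   = github_repository_environment.this[each.key].environment
  variable_name = "BACKEND_STORAGE_ACCOUNT_NAME"
  value         = each.value.backend.storage_account_name
}
```

Because every resource iterates over the same map, adding an environment becomes a one-entry change to the input rather than a new set of hand-written resources.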

I have also introduced a new manually triggered GitHub Actions workflow that allows you to “Break the Lease” on a particular environment’s Terraform State file. This is a break-glass scenario meant to be used sparingly — only when something has locked the state or is otherwise preventing you from making further changes. While this feature will rarely be needed in a well-run environment, having the capability to forcibly unlock the state file ensures that you can recover from unusual circumstances quickly without resorting to manual intervention through the backend itself.
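One possible shape for such a break-glass workflow is sketched below, again committed through `github_repository_file`. It assumes the state lives in an Azure Storage blob, that the workflow signs in with `azure/login` using OIDC, and that the signed-in identity holds a blob data role on the storage account. The file path and every `vars.*` name are hypothetical.

```hcl
terraform {
  required_providers {
    github = {
      source = "integrations/github"
    }
  }
}

variable "repository_name" {
  type = string
}

resource "github_repository_file" "break_lease" {
  repository          = var.repository_name
  branch              = "main"
  file                = ".github/workflows/break-lease.yml" # hypothetical path
  commit_message      = "Add manual Break the Lease workflow"
  overwrite_on_create = true

  content = <<-EOT
    name: Break the Lease

    # Manual trigger only; the operator picks the environment to unlock.
    on:
      workflow_dispatch:
        inputs:
          environment:
            description: Environment whose state lease should be broken
            type: environment
            required: true

    permissions:
      id-token: write
      contents: read

    jobs:
      break-lease:
        runs-on: ubuntu-latest
        environment: $${{ inputs.environment }}
        steps:
          - uses: azure/login@v2
            with:
              client-id: $${{ vars.ARM_CLIENT_ID }}
              tenant-id: $${{ vars.ARM_TENANT_ID }}
              subscription-id: $${{ vars.ARM_SUBSCRIPTION_ID }}
          - name: Break the blob lease on the state file
            run: |
              az storage blob lease break \
                --account-name "$${{ vars.BACKEND_STORAGE_ACCOUNT_NAME }}" \
                --container-name "$${{ vars.BACKEND_CONTAINER_NAME }}" \
                --blob-name "$${{ vars.BACKEND_STATE_KEY }}" \
                --auth-mode login
  EOT
}
```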

Introducing the New Sub-Module

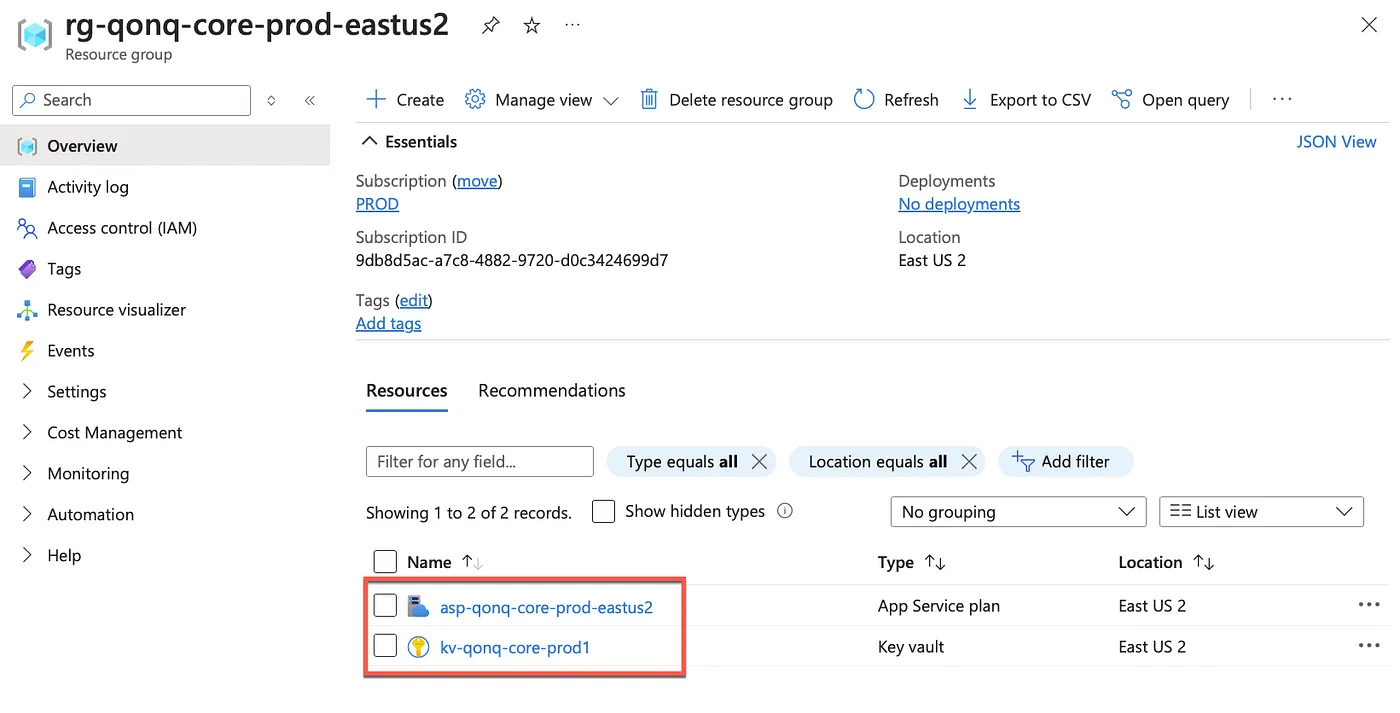

The new sub-module that I’ve developed aims to provision a core multi-region active-active Azure Functions environment. This environment sets the stage for hosting numerous Azure Functions workloads that can be deployed on top of it. Rather than building each Function App’s infrastructure stack from scratch, we provide a shared core that includes App Service Plans, observability components like Application Insights, a Log Analytics workspace, KeyVault integration for secrets management, and more. By reusing this shared core infrastructure for each additional Function App scenario, we ensure that all workloads receive consistent observability, security, and governance controls without requiring each team or project to reinvent the wheel.

To implement this scenario, I leverage the broader GitHub AT-AT GitFlow implementation that underpins much of the existing repository structure. In doing so, I’ve made some pretty significant enhancements to the foundational modules while adding this new scenario. The result is a more cohesive, integrated solution that uses Infrastructure-as-Code principles to deploy everything from the GitHub repository to the Azure infrastructure in a consistent, automated manner.

The Root Module and the Regional Stamp

At the heart of this new scenario sits the Root Module for the Azure Functions core environment. This Root Module acts as a pass-through for many input variables that drive the configuration of the Azure Functions App Service Plan and related resources.
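A pass-through Root Module mostly consists of variable declarations that are handed straight to child modules. The sketch below shows what a few of those declarations might look like; the descriptions and the validation rule are assumptions added for illustration and are not taken from the AT-AT source.

```hcl
variable "primary_location" {
  type        = string
  description = "Azure region for the primary regional stamp, for example eastus2."
}

variable "os_type" {
  type        = string
  description = "Operating system for the App Service Plan in every region."

  # Hypothetical guard rail: fail at plan time instead of at the Azure API.
  validation {
    condition     = contains(["Linux", "Windows"], var.os_type)
    error_message = "The os_type value must be either \"Linux\" or \"Windows\"."
  }
}

variable "sku_name" {
  type        = string
  description = "App Service Plan SKU, for example Y1 for the Consumption plan."
}
```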

One of the design philosophies I’ve embraced is the use of local modules to create solution-specific modules that each represent a regional stamp. By doing so, each region can be represented as a clean, self-contained unit of infrastructure, defined by a module block in the Terraform configuration. You only need to adjust a couple of parameters, like location and number, to create a new region. This makes scaling out to multiple regions a matter of simple copy-paste and slight edits, rather than re-architecting the entire solution each time.

Below is an example of how we define a primary regional stamp within the Root Module:

    module "region_stamp_primary" {
      source = "./modules/regional-stamp"

      location = var.primary_location
      name     = "${var.application_name}-${var.environment_name}"
      number   = 1
      os_type  = var.os_type
      sku_name = var.sku_name
      tags     = local.all_tags

      secrets = {
        "ApplicationInsights-InstrumentationKey" = azurerm_application_insights.main.instrumentation_key
        "ApplicationInsights-AppId"              = azurerm_application_insights.main.app_id
        "ApplicationInsights-ConnectionString"   = azurerm_application_insights.main.connection_string
      }

      terraform_identity = {
        tenant_id = data.azurerm_client_config.current.tenant_id
        object_id = data.azurerm_client_config.current.object_id
      }
    }

Here, the region_stamp_primary module references a local ./modules/regional-stamp directory, passing in variables that define the region, the application name, the environment name, and various configuration values. You’ll notice we set a secrets map that includes application insights settings. These values are generated by resources in the Root Module and passed down to the regional module so that each region’s Function Apps can leverage a shared observability stack. This keeps everything consistent and ensures that your multiple regions have the same baseline instrumentation and metrics.
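On the receiving side, the regional stamp needs matching input declarations for the values passed down to it. The following is a minimal sketch of what two of them could look like inside ./modules/regional-stamp; the types and descriptions are inferred from the module call above and are assumptions rather than the module's actual source. The terraform_identity input is described in the next paragraph.

```hcl
# Secret name => secret value, generated in the Root Module and shared
# by every regional stamp.
variable "secrets" {
  type        = map(string)
  description = "Secrets to make available to the Function Apps in this region."
  default     = {}
}

# Identity that Terraform runs as, used for Key Vault access and role assignments.
variable "terraform_identity" {
  type = object({
    tenant_id = string
    object_id = string
  })
  description = "Tenant and object IDs of the identity executing Terraform."
}
```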

The terraform_identity block is a simple DTO (Data Transfer Object) that collects the tenant and object IDs that Terraform needs to configure KeyVault and create role assignments. This is critical for ensuring that Terraform can securely store and manage secrets within KeyVault as well as assign the required access roles to Azure resources. The simplicity of the design means you can add new regions by repeating the region_stamp_primary module block under a new name, adjusting the location and number parameters accordingly, and pointing to the same underlying module.
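For instance, a second region might be added with a block like the one below, placed alongside region_stamp_primary in the same Root Module. The module name `region_stamp_secondary` and the `secondary_location` variable are hypothetical; everything else is reused from the primary stamp.

```hcl
# Hypothetical input: the second Azure region to deploy into.
variable "secondary_location" {
  type = string
}

module "region_stamp_secondary" {
  source = "./modules/regional-stamp"

  # Only these two values differ from the primary stamp.
  location = var.secondary_location
  number   = 2

  name     = "${var.application_name}-${var.environment_name}"
  os_type  = var.os_type
  sku_name = var.sku_name
  tags     = local.all_tags

  # Same shared observability settings as the primary region.
  secrets = {
    "ApplicationInsights-InstrumentationKey" = azurerm_application_insights.main.instrumentation_key
    "ApplicationInsights-AppId"              = azurerm_application_insights.main.app_id
    "ApplicationInsights-ConnectionString"   = azurerm_application_insights.main.connection_string
  }

  terraform_identity = {
    tenant_id = data.azurerm_client_config.current.tenant_id
    object_id = data.azurerm_client_config.current.object_id
  }
}
```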

I have also changed the subscription permissions granted to the Terraform-managed Entra ID identity to “Owner” so that it can create Role Assignments. This level of access is needed because we want Terraform to control resource permissions dynamically. By granting “Owner,” Terraform can handle complex provisioning tasks that include security roles for KeyVault secrets. While this might not be necessary in simpler configurations, the complexity of active-active scenarios and shared infrastructures often requires this level of flexibility and automation.
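To show the kind of role assignment this permission enables, here is a minimal sketch that grants the Terraform identity a secrets role on a Key Vault. The `key_vault_id` variable and the resource name are assumptions; "Key Vault Secrets Officer" is an Azure built-in role, and it only takes effect on vaults that use Azure RBAC authorization.

```hcl
# Hypothetical input: resource ID of the Key Vault created by the regional stamp.
variable "key_vault_id" {
  type = string
}

variable "terraform_identity" {
  type = object({
    tenant_id = string
    object_id = string
  })
}

# Creating this resource requires permission to write role assignments,
# which the Owner role includes.
resource "azurerm_role_assignment" "terraform_secrets_officer" {
  scope                = var.key_vault_id
  role_definition_name = "Key Vault Secrets Officer"
  principal_id         = var.terraform_identity.object_id
}
```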

Moving From the Starter App to the New Scenario

To leverage this variant of the “Azure Terraform Starter App,” all you have to do is reference a different sub-module in the GitHub AT-AT called azure-fn-core. This sub-module is where the bulk of the infrastructure magic happens. Here’s what that might look like:

    module "app" {
      source = "../../modules/azure-fn-core"

      application_name       = var.application_name
      github_organization    = var.github_organization
      repository_name        = var.github_repository_name
      repository_description = var.github_repository_description
      repository_visibility  = var.github_repository_visibility
      terraform_version      = var.terraform_version
      primary_location       = "eastus2"
      os_type                = "Linux"
      sku_name               = "Y1"
      retention_in_days      = 30

      commit_user = {
        name  = var.github_username
        email = var.github_email
      }

      environments = {
        dev = {
          subscription_id = var.azure_dev_subscription
          branch_name     = "develop"
          backend         = var.nonprod_backend
        }
        test = {
          subscription_id = var.azure_dev_subscription
          branch_name     = "release"
          backend         = var.nonprod_backend
        }
        prod = {
          subscription_id = var.azure_prod_subscription
          branch_name     = "main"
          backend         = var.prod_backend
        }
      }
    }

All of the input variables closely match those in the original starter app. The difference now is that we’re actually provisioning App Service Plans and the observability stack along with the pipeline setup. For that reason, a few more parameters are available and need to be configured. The os_type controls the operating system that your Functions runtime will use across all regions, which keeps the environment consistent and less prone to subtle discrepancies. Similarly, sku_name dictates the scale and pricing tier of your App Service Plan. In this example, “Y1” specifies the Azure Functions Consumption plan, meaning you’ll have zero upfront cost and pay only for the executions your functions perform and the resources they consume while running. This is a great baseline for development, testing, or even production workloads that require elastic scale without steady-state costs.
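Inside a regional stamp, these two parameters would most plausibly end up on an `azurerm_service_plan` resource. The sketch below assumes that mapping; the resource names and naming convention are illustrative, not the module's actual code.

```hcl
variable "name" {
  type = string
}

variable "number" {
  type = number
}

variable "location" {
  type = string
}

variable "os_type" {
  type = string
}

variable "sku_name" {
  type = string
}

# Hypothetical naming convention: one resource group per regional stamp.
resource "azurerm_resource_group" "main" {
  name     = "rg-${var.name}-${var.number}"
  location = var.location
}

resource "azurerm_service_plan" "main" {
  name                = "asp-${var.name}-${var.number}"
  resource_group_name = azurerm_resource_group.main.name
  location            = azurerm_resource_group.main.location

  os_type  = var.os_type  # "Linux" in the example above
  sku_name = var.sku_name # "Y1" selects the Consumption plan
}
```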

The retention_in_days parameter configures the Log Analytics workspace retention period that underpins Application Insights and other observability services. By controlling the retention period, you can balance cost against historical observability needs. Storing logs and metrics for longer can be beneficial for troubleshooting complex issues that only appear intermittently, but it also comes with additional storage costs. With Infrastructure-as-Code, you can easily adjust these parameters later, commit the changes to version control, and rely on GitHub Actions plus Terraform to apply them consistently across your environments.
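As a sketch of where this parameter might land, the following pairs a Log Analytics workspace with a workspace-based Application Insights resource. The resource names, the `PerGB2018` SKU, and the input variables are assumptions for illustration.

```hcl
variable "resource_group_name" {
  type = string
}

variable "location" {
  type = string
}

variable "retention_in_days" {
  type    = number
  default = 30
}

resource "azurerm_log_analytics_workspace" "main" {
  name                = "log-shared-core" # hypothetical name
  resource_group_name = var.resource_group_name
  location            = var.location
  sku                 = "PerGB2018"
  retention_in_days   = var.retention_in_days
}

# Workspace-based Application Insights stores its telemetry in the
# workspace above, so the retention setting applies to it as well.
resource "azurerm_application_insights" "main" {
  name                = "appi-shared-core" # hypothetical name
  resource_group_name = var.resource_group_name
  location            = var.location
  workspace_id        = azurerm_log_analytics_workspace.main.id
  application_type    = "web"
}
```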

Conclusion

With the introduction of this new active-active Azure Functions core environment module, the GitHub AT-AT takes its first step toward supporting more advanced scenarios. We now have a fully automated, easily extendable scenario that not only creates a shared infrastructure baseline for Azure Functions workloads, but also encourages best practices in DevOps, Infrastructure-as-Code, and multi-region resiliency.

The enhancements to the core modules — such as improved workflow concurrency controls, centralized environment configuration, and the break-glass scenario for Terraform State — make managing complex environments more straightforward and reliable.

Meanwhile, the approach to provisioning regions and scaling out capacity by simply reusing local modules and adjusting a handful of parameters keeps complexity down and consistency up.

Going forward, this scenario will serve as a foundation for building and deploying individual Function Apps that share the same infrastructure, observability, and compute, making it easier than ever to run resilient, globally distributed serverless applications on Azure.