Building a Baseline Azure Environment with Terraform

Not everybody has the luxury of starting fresh. But if you are, and you want to keep things simple while still having a solid foundation to build on in the enterprise, this is an excellent place to start.

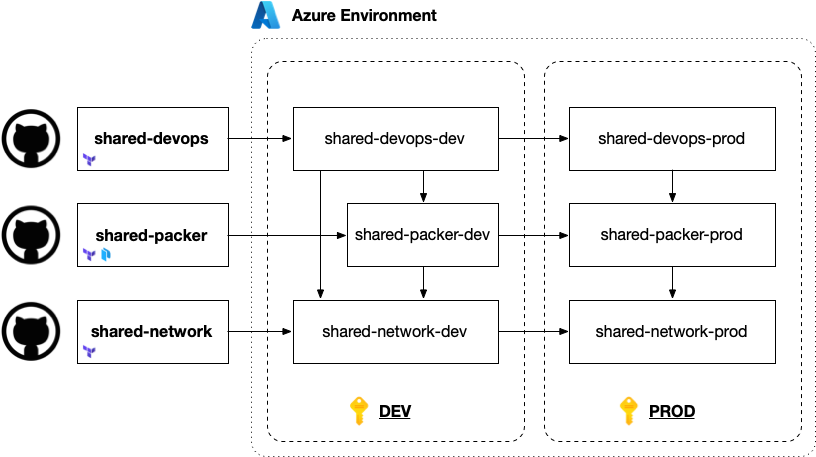

This article details a baseline Azure DevOps environment that leverages a layered architecture, allowing for secure image building, provisioning with Terraform, and controlled access to Azure services, all while prioritizing modularity and network isolation.

The Bedrock of any Infrastructure

The network layer is at the core of this architecture, forming the foundational base upon which all other components are built. There are two ways networks are built and managed with Infrastructure-as-Code.

First, you have the ad hoc solution networks that are provisioned with the solution they host. Sometimes, in the wild, Wild West of cloud, small solution-oriented networks pop up like mushrooms—ungoverned, disconnected from each other and the rest of the enterprise. One of the most prominent challenges organizations go through is trying to wrap their arms around all these micro-solutions and bring some consistency and, dare I say it, conformity to the unruly masses.

Second, you have the core central network the broader organization uses to connect these disparate systems. Unfortunately, due to cloud-first initiatives often “bucking the system” and seizing the opportunities of the cloud to bring their solutions to reality rapidly, there is often a lack of governance and, more importantly, discipline in place when these initiatives are started and even before they are put into production.

The Problem: Spaghetti Networks

Most teams, whether they are a small company with only a single team or an incubator at a large company that is purposefully designed to operate like a small company to push the needle and explore an idea, eventually reach the same point: regardless of where they start, if they are successful they will ultimately have to “grow up”. This is a good thing and a natural growing pain of a successful team, but it’s not easy, and it can often feel like you are a victim of your own success.

Often, that means locking down your environment by using all private endpoints. However, in order to do that, you need to have a reliable way to connect all your services together and give your environment operators reliable access to the environment.

That means your teams will need some form of VPN connectivity to reach your environment. You can set up a Virtual Network and attach a VPN Gateway to it, but then you’ll have to deal with VNet peering to branch out from the network your VPN clients get dropped off in to the other networks. As your network grows, manually configuring VNet peering across multiple VNets and VPN Gateways becomes challenging, potentially creating a ‘spaghetti network’ effect where managing connections, routes, and security becomes complex.

The Solution: V-WAN

These growing pains can be avoided by leveraging one of the greatest Azure services: Azure Virtual WAN, or V-WAN for short. With V-WAN, you can establish a solid foundation and scale your network geographically as needed. The pricing includes a fixed monthly cost because Virtual Hubs, VPN Scale Units, and Connection Units are billed at hourly rates. This means that costs are incurred for every hour the Virtual Hub and P2S VPN Gateway are deployed, regardless of whether or not clients are actively connected. A bare minimum environment with a single-region Virtual Hub and P2S VPN Gateway will look like this (the monthly figures work out to 750 hours at the hourly rate):

| Service | $ / Hour | $ / Month |
|---|---|---|
| Virtual Hub | $0.25 | $187.50 |
| VPN P2S Scale Unit | $0.361 | $270.75 |
| VPN P2S Connection Unit | $0.013 | $9.75 |

These costs seem like a lot by themselves, but once you consider how they help you scale your networking environment by centralizing key networking services like VPN and Firewall across your wider network, they start paying off. You should not be setting up a V-WAN for every tiny project or initiative your team or organization takes on. The V-WAN should be thought of as shared infrastructure. It’s an interstate highway; your applications’ Virtual Networks are the “in town” side roads.
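
As a rough sketch of that single-region baseline, the Terraform below provisions a Virtual WAN, one Virtual Hub, and a P2S VPN Gateway with a single scale unit. The resource names, region, address ranges, and the var.vpn_server_configuration_id input are illustrative assumptions, not values taken from a real environment.

```hcl
# Hypothetical inputs; adjust to your own naming and addressing plan.
variable "location" {
  type    = string
  default = "eastus2"
}

variable "vpn_server_configuration_id" {
  type        = string
  description = "ID of an existing azurerm_vpn_server_configuration (assumed to be managed elsewhere)."
}

resource "azurerm_resource_group" "network" {
  name     = "rg-network-core"
  location = var.location
}

resource "azurerm_virtual_wan" "core" {
  name                = "vwan-core"
  resource_group_name = azurerm_resource_group.network.name
  location            = azurerm_resource_group.network.location
}

# One hub per region. The hub is billed hourly whether or not it carries traffic.
resource "azurerm_virtual_hub" "core" {
  name                = "vhub-core-${var.location}"
  resource_group_name = azurerm_resource_group.network.name
  location            = azurerm_resource_group.network.location
  virtual_wan_id      = azurerm_virtual_wan.core.id
  address_prefix      = "10.0.0.0/23"
}

# Point-to-site gateway with a single scale unit.
resource "azurerm_point_to_site_vpn_gateway" "core" {
  name                        = "p2s-core-${var.location}"
  resource_group_name         = azurerm_resource_group.network.name
  location                    = azurerm_resource_group.network.location
  virtual_hub_id              = azurerm_virtual_hub.core.id
  vpn_server_configuration_id = var.vpn_server_configuration_id
  scale_unit                  = 1

  connection_configuration {
    name = "p2s-clients"

    # Address pool handed out to VPN clients; must not overlap the hub or spokes.
    vpn_client_address_pool {
      address_prefixes = ["172.16.0.0/24"]
    }
  }
}
```

Keeping the VPN server configuration (authentication settings) as an input is just one option; it could equally live in the same configuration.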

Managing your V-WAN’s Connections

The network is the first element deployed, as all other layers depend on it for connectivity and security. This foundational network layer should be kept isolated, both to keep the code simple and easily understandable and to reduce the audience that can make changes to it, decoupling it from changes to other infrastructure components, whether shared or application-specific. Think about this as creating a bit of a moat around the Infrastructure-as-Code for the shared network. This is not just any network; it is the core network connecting all of your applications, services, and end users (wherever they are) together. It should not be touched except by someone who is responsible for it with a capital ‘R’.

Being responsible for it means understanding its customers and the dependencies they draw, and analyzing and predicting the impact of potential changes as they ripple across the environment, because they will. It also means helping onboard customers by granting them access to attach to one of the Virtual Hubs. Remember, the Virtual Hubs cost money, so you shouldn’t pass them out like candy.

Your application teams will just need to create a connection.

resource "azurerm_virtual_hub_connection" "core" {
  name                      = "vnet-${var.application_name}-${var.environment_name}"
  virtual_hub_id            = azurerm_virtual_hub.core.id
  remote_virtual_network_id = azurerm_virtual_network.main.id
}
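
When the hub lives in the network team’s repository and subscription, an application’s configuration cannot reference azurerm_virtual_hub.core directly. One possible way to handle that, sketched below, is to look the hub up with a data source through an aliased provider. The hub name, resource group name, and var.hub_subscription_id are assumptions for illustration; azurerm_virtual_network.main and the two naming variables are assumed to be declared elsewhere in the application’s configuration.

```hcl
variable "hub_subscription_id" {
  type        = string
  description = "Subscription that hosts the shared Virtual Hub (hypothetical input)."
}

# Default provider: the application team's own subscription
# (for example, taken from ARM_SUBSCRIPTION_ID).
provider "azurerm" {
  features {}
}

# Aliased provider pointing at the subscription that hosts the shared hub.
provider "azurerm" {
  alias           = "hub"
  subscription_id = var.hub_subscription_id
  features {}
}

# Read-only lookup of the hub that the network team owns.
data "azurerm_virtual_hub" "core" {
  provider            = azurerm.hub
  name                = "vhub-core-eastus2" # assumed hub name
  resource_group_name = "rg-network-core"   # assumed resource group
}

# The connection is a child of the hub, so it is created through the hub provider.
resource "azurerm_virtual_hub_connection" "core" {
  provider                  = azurerm.hub
  name                      = "vnet-${var.application_name}-${var.environment_name}"
  virtual_hub_id            = data.azurerm_virtual_hub.core.id
  remote_virtual_network_id = azurerm_virtual_network.main.id
}
```

With this shape, the application team only ever reads the hub and owns nothing but its own connection in state.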

A custom Role Definition that grants just the needed permissions can help the spoke networks attach to the hub without too much friction. The following permissions need to be granted so that the core Terraform workflow can manage Virtual Hub Connections.

Microsoft.Network/virtualHubs/hubVirtualNetworkConnections/read

Microsoft.Network/virtualHubs/hubVirtualNetworkConnections/write

Microsoft.Network/virtualHubs/hubVirtualNetworkConnections/delete
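
A minimal sketch of such a Role Definition in Terraform might look like the following. The role name, var.virtual_hub_id, and var.spoke_team_principal_id are hypothetical; the role is defined at the subscription that hosts the hub and then assigned at the narrower Virtual Hub scope. Depending on your setup, the executing identity may need additional permissions beyond the three listed here.

```hcl
variable "virtual_hub_id" {
  type        = string
  description = "Resource ID of the shared Virtual Hub (hypothetical input)."
}

variable "spoke_team_principal_id" {
  type        = string
  description = "Object ID of the identity a spoke team runs Terraform under (hypothetical input)."
}

# The subscription that hosts the V-WAN (the provider's current subscription).
data "azurerm_subscription" "current" {}

resource "azurerm_role_definition" "hub_connection_operator" {
  name        = "Virtual Hub Connection Operator" # assumed role name
  scope       = data.azurerm_subscription.current.id
  description = "Create, read, and delete Virtual Hub Connections only."

  permissions {
    actions = [
      "Microsoft.Network/virtualHubs/hubVirtualNetworkConnections/read",
      "Microsoft.Network/virtualHubs/hubVirtualNetworkConnections/write",
      "Microsoft.Network/virtualHubs/hubVirtualNetworkConnections/delete",
    ]
    not_actions = []
  }

  assignable_scopes = [data.azurerm_subscription.current.id]
}

# Assign the role at the hub itself rather than the whole subscription.
resource "azurerm_role_assignment" "spoke_team" {
  scope              = var.virtual_hub_id
  role_definition_id = azurerm_role_definition.hub_connection_operator.role_definition_resource_id
  principal_id       = var.spoke_team_principal_id
}
```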

The service teams that are attaching to your Virtual Hubs will need the identity that they execute Terraform under to be able to perform these operations. Unfortunately, you can scope these operations only to the Subscription, V-WAN, or Virtual Hub. That means that if you use this as the sole mechanism for granting access to the Virtual Hub, Team A could accidentally delete Team B’s connection by misusing the permission. This scenario is very unlikely when using Terraform, because a team’s configuration will only ever delete a Virtual Hub Connection that it created. However, if somebody on Team A used the permissions from the Azure CLI, they could definitely do some damage. This is where a combination of RBAC and Azure Policy can get the job done.

The Virtual Hub is hosted in Subscription X and is managed by the Network Platform Team. Teams A and B operate out of their own subscriptions, Subscriptions A and B. Grant both Teams A and B permission to create, read, and delete Virtual Hub Connections on the V-WAN in Subscription X. Then impose an Azure Policy with enforcement that only allows Virtual Hub Connection operations when the target Virtual Network is within the requesting team’s own subscription. This effectively allows Team A to create Virtual Networks in Subscription A and join them to the Virtual Hub in Subscription X, but not to meddle with the Virtual Hub Connections for other teams’ Virtual Networks in other subscriptions.

Changing your V-WAN

With the V-WAN isolated into its own source code repository, with its own automation pipelines that roll changes into the long-lived environments you manage, the Network Platform Team responsible (with a capital ‘R’) for it will have an easier time assessing the impact of change requests on the broader environment. This is important because, once infrastructure components are layered on top of the network, future changes to it can become challenging due to the dependencies.

For instance, if a region within the V-WAN needs to be removed, any Virtual Networks connected to that region’s Virtual Hub would have to be detached first, or the operation might fail outright. Now this shouldn’t happen very often but it’s definitely something to consider when you are constructing foundational infrastructure layers using Infrastructure-as-Code.
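
One possible way to make a region removal a deliberate act rather than a side effect is to drive the hubs from a map and guard them with prevent_destroy. This is only a sketch: the variable names, regions, and address prefixes are assumptions.

```hcl
variable "resource_group_name" {
  type = string
}

variable "virtual_wan_id" {
  type = string
}

# Region name => hub address prefix (illustrative values).
variable "hub_regions" {
  type = map(string)
  default = {
    eastus2    = "10.0.0.0/23"
    westeurope = "10.1.0.0/23"
  }
}

resource "azurerm_virtual_hub" "regional" {
  for_each = var.hub_regions

  name                = "vhub-core-${each.key}"
  resource_group_name = var.resource_group_name
  location            = each.key
  virtual_wan_id      = var.virtual_wan_id
  address_prefix      = each.value

  # Removing a region from var.hub_regions now fails at plan time
  # until this guard is intentionally lifted.
  lifecycle {
    prevent_destroy = true
  }
}
```

The guard does not detach spoke networks for you; it simply forces a conversation before a hub that others depend on disappears.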

Other scenarios will happen more often, such as modifications to routing or firewall rules. These types of changes, again, require careful planning and awareness of the implications on downstream networks, applications, and services that rely on the network’s stability and continuity.

Packer Infrastructure

The next essential layer is the Packer infrastructure, which provides a controlled environment for creating, managing, and distributing reusable virtual machine images. This layer is based on an Azure Compute Gallery, a private gallery that stores, manages, and replicates these images across the different regions supported by the V-WAN. By using this infrastructure, we ensure that all deployed environments have access to the same standardized images, which supports consistency across the environment and reduces the risks associated with configuration drift.

When Packer runs, it saves the images directly into this gallery. The Compute Gallery then replicates the images across the specified regions. We need to configure our Packer templates to target this Compute Gallery and we can even specify the regions that we want the image replicated to. However, if we aren’t sure at the time of bake, then we can decide later by making updates to the individual Virtual Machine Image Versions to make them available in the appropriate region.
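
A Packer template that targets the gallery could be sketched roughly as follows, using the azure-arm builder’s shared_image_gallery_destination block. The gallery, resource group, base image, VM size, and region list are all assumptions, and depending on your plugin version you may also need to set managed_image_name and managed_image_resource_group_name.

```hcl
packer {
  required_plugins {
    azure = {
      source  = "github.com/hashicorp/azure"
      version = ">= 2.0.0"
    }
  }
}

variable "subscription_id" {
  type = string
}

variable "image_version" {
  type    = string
  default = "1.0.0" # gallery versions use Major.Minor.Patch
}

source "azure-arm" "gold" {
  use_azure_cli_auth = true
  subscription_id    = var.subscription_id

  # Marketplace base image (assumed); start from whatever your hardening builds on.
  os_type         = "Linux"
  image_publisher = "Canonical"
  image_offer     = "0001-com-ubuntu-server-jammy"
  image_sku       = "22_04-lts"

  location = "eastus2"
  vm_size  = "Standard_D2s_v5"

  # The image definition must already exist in the gallery before the build runs.
  shared_image_gallery_destination {
    subscription        = var.subscription_id
    resource_group      = "rg-images"
    gallery_name        = "acme_gallery"
    image_name          = "acme-gold-ubuntu-2204"
    image_version       = var.image_version
    replication_regions = ["eastus2", "westeurope"]
  }
}

build {
  sources = ["source.azure-arm.gold"]

  provisioner "shell" {
    execute_command = "chmod +x {{ .Path }}; {{ .Vars }} sudo -E sh '{{ .Path }}'"
    inline = [
      "apt-get update",
      # Generalize the VM so the captured image can be reused.
      "/usr/sbin/waagent -force -deprovision+user && export HISTSIZE=0 && sync",
    ]
  }
}
```

Adding a region to replication_regions only affects versions baked after the change, which is why existing versions need a separate update.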

This is the only area that starts to rely pretty heavily on ClickOps. That’s because of the way Packer and Terraform share responsibility. Terraform provisions the Compute Gallery but it also provisions the Image Definitions. Without an Image Definition in the Compute Gallery, Packer can’t do anything. Full stop. Therefore, you will need to plan the image definitions accordingly and stage them within the Compute Gallery before you attempt Packer builds.

The ClickOps aspect comes about with image region replication. Packer doesn’t manage the state of the image versions. It simply stamps out new versions inside the image definition in the Compute Gallery. So let’s say you have a stable, well-tested image, let’s call it “v1.0.16”, and you have been running with that in production for a few weeks. Now you want to scale out to a new region. The Packer template did not include this region originally, hence the image version is not replicated there. Do you bake a new image after updating the Packer template? Or do you ClickOps the replicated regions to add the region you want on the known good image? I think the latter. But make sure to update the Packer template too so you’ll have new versions in the desired regions going forward!

resource "azurerm_shared_image" "gold" {
  name                = "acme-gold-ubuntu-2204"
  gallery_name        = azurerm_shared_image_gallery.main.name
  resource_group_name = azurerm_resource_group.main.name
  location            = azurerm_resource_group.main.location
  os_type             = "Linux"

  identifier {
    publisher = "Acme"
    offer     = "Gold"
    sku       = "Ubuntu-2204"
  }
}
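
The image definition above references a resource group and a Compute Gallery that are not shown. A minimal sketch of those two resources, with assumed names and region, could look like this:

```hcl
resource "azurerm_resource_group" "main" {
  name     = "rg-images" # assumed name
  location = "eastus2"   # assumed region
}

# Gallery names allow letters, numbers, dots, and underscores (no hyphens).
resource "azurerm_shared_image_gallery" "main" {
  name                = "acme_gallery" # assumed name
  resource_group_name = azurerm_resource_group.main.name
  location            = azurerm_resource_group.main.location
  description         = "Shared images published by Packer builds."
}
```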

There will likely be a set of images that are built on a regular basis by the Enterprise Platform Team. These images are likely hardened versions of common Operating Systems that are in use within the enterprise.

You will very likely have other teams that want to build their own images. Similar to how we want teams to branch off of the main Virtual Hub, we want teams to branch off of the main Compute Gallery. That means those teams will need to manage their own azurerm_shared_image resources within the Compute Gallery, and their own Packer build pipelines to produce new versions of the image over time.

You should have someone from the Platform Team work with these teams to ensure they follow enterprise naming standards for the images that they publish—otherwise things will get out of hand quickly.

DevOps Infrastructure

Now that we have a network and the ability to create customized Virtual Machine images that are fit for purpose, the first application we need to roll out is one that will enable us to adopt DevOps culture en masse within our organization: pipeline automation.

We have all heard about the new service called “Azure DevOps Managed Pools”. It isn’t supported by the azurerm Terraform provider yet but, of course, it is supported by the azapi provider today. Here’s the documentation.

# Schema skeleton: "string" and int are type placeholders, not working values.
resource "azapi_resource" "symbolicname" {
  type     = "Microsoft.DevOpsInfrastructure/pools@2024-10-19"
  name     = "string"
  location = "string"

  body = jsonencode({
    properties = {
      agentProfile               = {}
      devCenterProjectResourceId = "string"
      fabricProfile              = {}
      maximumConcurrency         = int
      organizationProfile        = {}
      provisioningState          = "string"
    }
  })
}
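
To make the skeleton a little more concrete, here is a hedged sketch with the placeholders filled in. Every input variable, the pool name, the VM size, and the concurrency values are assumptions, and the nested property names reflect my reading of the resource schema, so check them against the reference before relying on them. The jsonencode call matches the style above; newer azapi releases accept a plain HCL object for body instead.

```hcl
variable "resource_group_id" {
  type = string
}

variable "dev_center_project_id" {
  type = string
}

variable "azure_devops_org_url" {
  type = string # e.g. https://dev.azure.com/<your-org>
}

variable "agent_image_id" {
  type        = string
  description = "Compute Gallery image the agents boot from (hypothetical input)."
}

variable "agent_subnet_id" {
  type        = string
  description = "Subnet in the dedicated agent Virtual Network (hypothetical input)."
}

resource "azapi_resource" "managed_pool" {
  type      = "Microsoft.DevOpsInfrastructure/pools@2024-10-19"
  name      = "mdp-platform" # assumed pool name
  location  = "eastus2"      # assumed region
  parent_id = var.resource_group_id

  body = jsonencode({
    properties = {
      devCenterProjectResourceId = var.dev_center_project_id
      maximumConcurrency         = 2

      # Fresh agent for every job.
      agentProfile = { kind = "Stateless" }

      organizationProfile = {
        kind          = "AzureDevOps"
        organizations = [{ url = var.azure_devops_org_url, parallelism = 2 }]
      }

      # Agents run on VMs built from the gallery image, attached to the private network.
      fabricProfile = {
        kind           = "Vmss"
        sku            = { name = "Standard_D2ads_v5" }
        images         = [{ resourceId = var.agent_image_id }]
        networkProfile = { subnetId = var.agent_subnet_id }
      }
    }
  })
}
```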

I don’t want to dig into this too much in this article, but just be aware of this as an approach to provision your Azure DevOps Managed Pools. Internally at Microsoft, we have a similar service that we use, but essentially, they all have one thing in common: you have your automation pipelines running on Virtual Machines connected to your network.

With the network and Packer infrastructure in place, the next layer is where your build agents execute, whatever your automation tool happens to be (e.g., Azure DevOps, GitHub Actions, etc.) and whatever mechanism you use to provide compute resources (e.g., VMSS, Managed Pools, Container Apps, etc.).

This pool of compute connects to the V-WAN through a small, dedicated Virtual Network, ensuring that pipelines can be executed in a secure, controlled manner across various environments. This configuration allows for the management and execution of Infrastructure-as-Code with a secure route for data plane access to critical Azure services like Azure Key Vault, Azure Storage, or even Azure Kubernetes Service (AKS). These services remain securely within the Azure network boundary, as public endpoints are disabled, limiting exposure and enhancing security.
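
As an illustration of that small, dedicated network, the sketch below creates a Virtual Network with one subnet for the agents and one for private endpoints, plus a private endpoint to a Key Vault. All names, address ranges, and input variables are assumptions, and the private DNS zone wiring needed for name resolution is left out for brevity.

```hcl
variable "location" {
  type    = string
  default = "eastus2"
}

variable "resource_group_name" {
  type = string
}

variable "key_vault_id" {
  type        = string
  description = "Key Vault with public access disabled (hypothetical input)."
}

resource "azurerm_virtual_network" "agents" {
  name                = "vnet-devops-agents"
  resource_group_name = var.resource_group_name
  location            = var.location
  address_space       = ["10.10.0.0/24"]
}

# Subnet the build agents are placed in.
resource "azurerm_subnet" "agents" {
  name                 = "snet-agents"
  resource_group_name  = var.resource_group_name
  virtual_network_name = azurerm_virtual_network.agents.name
  address_prefixes     = ["10.10.0.0/26"]
}

# Separate subnet for private endpoints to data plane services.
resource "azurerm_subnet" "private_endpoints" {
  name                 = "snet-private-endpoints"
  resource_group_name  = var.resource_group_name
  virtual_network_name = azurerm_virtual_network.agents.name
  address_prefixes     = ["10.10.0.64/26"]
}

resource "azurerm_private_endpoint" "key_vault" {
  name                = "pe-kv-devops"
  resource_group_name = var.resource_group_name
  location            = var.location
  subnet_id           = azurerm_subnet.private_endpoints.id

  private_service_connection {
    name                           = "kv-connection"
    private_connection_resource_id = var.key_vault_id
    subresource_names              = ["vault"]
    is_manual_connection           = false
  }
}
```

The Virtual Network would then be attached to the hub with an azurerm_virtual_hub_connection, the same way any other spoke is.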

Layering Operational Environments and Applications

Once the foundational network, Packer infrastructure, and DevOps infrastructure are in place, additional operational environments can be layered in for various administrative purposes, as well as for hosting applications and services.

This approach allows the Platform Team to build out a shared infrastructure that enables DevOps culture and practices across the organization’s many Application and Service teams. Those teams can spin up different environments, each tailored to specific requirements, while leveraging the shared infrastructure: the V-WAN’s secure connectivity from the network layer, the Compute Gallery from the Packer layer, and the Virtual Machine pool from the DevOps layer.
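
From an application team’s point of view, consuming the shared layers might look something like the sketch below: the image is looked up from the Compute Gallery and used to build a Virtual Machine on a subnet that is already connected to the hub. The gallery, image, and VM names, the VM size, and the input variables are assumptions; if the gallery lives in another subscription, the data source would need an aliased provider.

```hcl
variable "location" {
  type    = string
  default = "eastus2"
}

variable "resource_group_name" {
  type = string
}

variable "subnet_id" {
  type        = string
  description = "Subnet in the application's spoke Virtual Network (hypothetical input)."
}

variable "admin_ssh_public_key" {
  type = string
}

# Look up the shared image definition published by the platform team.
data "azurerm_shared_image" "gold" {
  name                = "acme-gold-ubuntu-2204"
  gallery_name        = "acme_gallery" # assumed gallery name
  resource_group_name = "rg-images"    # assumed resource group
}

resource "azurerm_network_interface" "app" {
  name                = "nic-app-01"
  resource_group_name = var.resource_group_name
  location            = var.location

  ip_configuration {
    name                          = "internal"
    subnet_id                     = var.subnet_id
    private_ip_address_allocation = "Dynamic"
  }
}

resource "azurerm_linux_virtual_machine" "app" {
  name                  = "vm-app-01"
  resource_group_name   = var.resource_group_name
  location              = var.location
  size                  = "Standard_D2s_v5"
  admin_username        = "azureuser"
  network_interface_ids = [azurerm_network_interface.app.id]

  # Referencing the image definition picks up its latest replicated version.
  source_image_id = data.azurerm_shared_image.gold.id

  admin_ssh_key {
    username   = "azureuser"
    public_key = var.admin_ssh_public_key
  }

  os_disk {
    caching              = "ReadWrite"
    storage_account_type = "Standard_LRS"
  }
}
```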

In summary, this layered architecture provides a secure, scalable foundation for a robust DevOps environment in Azure. By building each layer—network, Packer, DevOps—incrementally and with a clear blast radius and minimal dependencies, organizations can achieve an Azure infrastructure that is modular, secure, and highly adaptable to changing requirements. This baseline configuration not only supports efficient and secure infrastructure provisioning but also ensures that deployments remain consistent across regions, enabling rapid scaling and reliable management of Azure resources. I’ve used this same approach internally at Microsoft to great effect in helping a small team manage a robust and expansive set of infrastructure. I hope you find it useful on your Azure journey.

Until Next Time—Happy Azure Terraforming!